Mistral Small 3.1: The Leading Open-Source Multimodal AI Model Setting New Industry Standards

Discover how Mistral Small 3.1 outperforms competitors such as GPT-4o Mini and Gemma 3 on Mistral AI's reported benchmarks while offering multimodal input, a 128k-token context window, and Apache 2.0 licensing. Learn about its key features, use cases, and why this 24B-parameter model is a notable step for accessible AI in 2025.

Introduction: A New Era of Open-Source AI Excellence

In the rapidly evolving landscape of artificial intelligence, finding a model that combines state-of-the-art performance, multimodal capabilities, and open-source accessibility has been challenging. Mistral AI's newly announced Mistral Small 3.1 aims to close that gap: a 24B-parameter model that handles text, images, and many languages, released under the permissive Apache 2.0 license.

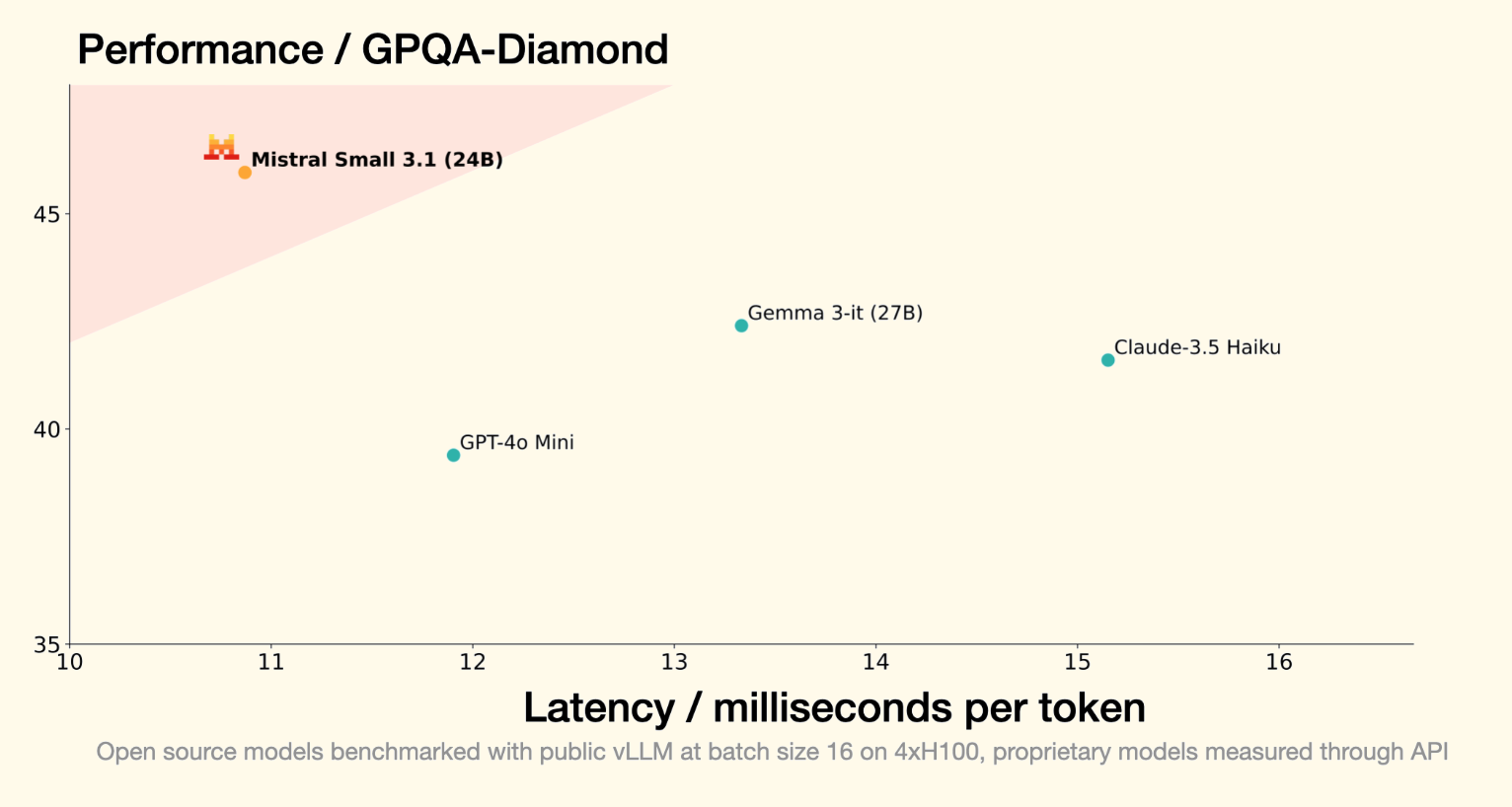

What makes Mistral Small 3.1 notable is that, according to Mistral AI's published benchmarks, it outperforms comparable models such as Google's open-weight Gemma 3 and OpenAI's proprietary GPT-4o Mini, while Mistral reports inference speeds of around 150 tokens per second. On those results, Mistral positions Small 3.1 as the best-performing model in its weight class.

PART 1. Breaking Down Mistral Small 3.1's Competitive Edge

Superior Performance in a Lightweight Package

Mistral Small 3.1 builds on its predecessor, Mistral Small 3, with improved text performance, multimodal understanding, and an expanded context window. According to Mistral AI's own benchmark evaluations, it surpasses similarly sized competitors on tasks covering text understanding, reasoning, and problem solving. Notably, it achieves these results with 24 billion parameters, a fraction of the size of the largest frontier models.

The model's compact size makes it practical to run locally. According to Mistral AI, once quantized, Mistral Small 3.1 can run on a single RTX 4090 GPU or a Mac with 32GB of RAM. This puts capabilities once associated with enterprise infrastructure within reach of individual developers and smaller organizations.
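To see why the "once quantized" qualifier matters, here is a back-of-the-envelope sketch of weight memory at different precisions. It assumes roughly 24 × 10^9 parameters and a fixed 4 GB of headroom for the KV cache and runtime (the headroom figure is our assumption, not a measured value), and it ignores framework-specific overhead, so treat the numbers as rough guides rather than real requirements.

```python
# Back-of-the-envelope weight-memory estimate for a ~24B-parameter model.
# Counts weights only; KV cache, activations and runtime overhead are
# approximated by an ASSUMED fixed headroom value.

NUM_PARAMS = 24e9  # approximate parameter count of Mistral Small 3.1


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits(num_params: float, bits_per_weight: int, device_gb: float,
         headroom_gb: float = 4.0) -> bool:
    """True if the weights plus the assumed headroom fit in device memory."""
    return weight_memory_gb(num_params, bits_per_weight) + headroom_gb <= device_gb


if __name__ == "__main__":
    # An RTX 4090 has 24 GB of VRAM.
    for bits in (16, 8, 4):
        gb = weight_memory_gb(NUM_PARAMS, bits)
        print(f"{bits:>2}-bit weights: {gb:5.1f} GB, "
              f"fits a 24 GB GPU: {fits(NUM_PARAMS, bits, 24.0)}")
```

At 16-bit precision the weights alone need about 48 GB, twice the 4090's memory, which is why the single-GPU claim applies to quantized builds; at 4 bits the weights shrink to about 12 GB.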

Multimodal Understanding: Beyond Text-Only AI

What sets Mistral Small 3.1 apart from many open-source alternatives of its size is multimodal understanding: the model accepts both text and images as input and reasons over them together, enabling more natural and comprehensive interactions. This opens the door to applications that often required proprietary or much larger systems, including:

- Document analysis with image components

- Visual question answering

- Image-based customer support

- Object detection and identification

- Visual content moderation

For developers building AI applications in 2025, multimodal input is increasingly expected rather than optional. Mistral Small 3.1 provides it while keeping the transparency and freedom of an open Apache 2.0 license.
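As an illustration of what a multimodal request can look like, the sketch below builds a chat payload that pairs a text question with an image. It assumes the message layout described in Mistral's vision documentation as we understand it (a `content` list mixing `text` and `image_url` parts, where `image_url` is a URL or a base64 data URL) and the model identifier `mistral-small-2503`; verify both against the current API reference. The helper names are hypothetical.

```python
import base64
import json

# ASSUMED model identifier for Mistral Small 3.1 on La Plateforme.
MODEL = "mistral-small-2503"


def image_to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_vision_request(question: str, image_url: str, model: str = MODEL) -> dict:
    """Hypothetical helper: one user turn combining a text part and an image part."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": image_url},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes only; a real request needs an actual image file.
    fake_png = b"\x89PNG\r\n\x1a\n"
    payload = build_vision_request("What is the total on this invoice?",
                                   image_to_data_url(fake_png))
    print(json.dumps(payload, indent=2))
```

Keeping payload construction separate from the HTTP call makes it easy to test and to swap in an SDK later.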

Expanded Context Window: 128k Tokens for Complex Tasks

Modern AI applications often require handling long-form content, multiple documents, or extended conversations. Mistral Small 3.1 expands its context window to 128,000 tokens (up from 32k in Mistral Small 3), allowing it to work across lengthy inputs and interactions in a single request.

This extended context capability allows for:

- Analysis of entire research papers

- Processing multiple documents simultaneously

- Maintaining consistent conversation history

- Summarizing complex legal documents

- Tracking complex narratives in creative writing
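Before sending several documents in one request, it helps to estimate whether they fit. The sketch below uses a crude characters-per-token heuristic (about 4 characters per token for English prose, an assumption rather than the model's real tokenizer) and reserves part of the window for the model's reply; for exact counts you would use the model's own tokenizer.

```python
import math

# Context size as stated for Mistral Small 3.1.
CONTEXT_WINDOW = 128_000
# ASSUMED heuristic for English prose; not the model's actual tokenizer.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Approximate token count from character length, rounded up."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(documents: list[str], reserved_for_output: int = 2_000,
                    window: int = CONTEXT_WINDOW) -> bool:
    """Check whether all documents plus an output budget fit in one request."""
    prompt_tokens = sum(estimate_tokens(doc) for doc in documents)
    return prompt_tokens + reserved_for_output <= window


if __name__ == "__main__":
    paper = "x" * 200_000  # stand-in for a ~50k-token research paper
    print(fits_in_context([paper]))                # True
    print(fits_in_context([paper, paper, paper]))  # False
```

When the check fails, the usual fallbacks are chunking with per-chunk summaries or retrieving only the relevant passages.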

PART 2. Key Features and Capabilities for Practical Applications

Fast-Response Conversational Assistance

With Mistral-reported inference speeds of around 150 tokens per second, Mistral Small 3.1 can generate responses quickly enough to keep users engaged. This makes it well suited to virtual assistants, customer support chatbots, and other applications where quick, accurate responses are essential.
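To make the throughput figure concrete, a simple model of response time is initial latency plus output length divided by generation speed. The sketch below uses Mistral's reported 150 tokens per second as a default and an assumed 0.3 s time to first token; real numbers depend on hardware, quantization, batch size, and prompt length.

```python
# Rough response-time estimate from a tokens-per-second figure.
# 150 tok/s is Mistral AI's reported speed; real throughput varies.
REPORTED_TOKENS_PER_SECOND = 150.0


def response_seconds(output_tokens: int,
                     tokens_per_second: float = REPORTED_TOKENS_PER_SECOND,
                     time_to_first_token: float = 0.0) -> float:
    """Total wall-clock time: initial latency plus steady-state generation time."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return time_to_first_token + output_tokens / tokens_per_second


if __name__ == "__main__":
    # A short chatbot reply vs. a longer answer, with an ASSUMED 0.3 s
    # time to first token.
    for tokens in (75, 300):
        secs = response_seconds(tokens, time_to_first_token=0.3)
        print(f"{tokens} tokens -> {secs:.2f} s")
```

At 150 tokens per second, a 300-token answer takes about 2 seconds of generation time, and streaming tokens as they arrive makes the wait feel shorter still.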

Low-Latency Function Calling

For developers building complex workflows or agentic systems, Mistral Small 3.1 supports low-latency function calling. The model returns structured requests to call tools you define, and your application executes them against external tools, databases, and APIs, which makes the model easier to integrate into production environments.
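The application side of function calling can be sketched as a tool schema plus a dispatcher. This assumes the JSON-Schema tool format and the tool-call shape (`id`, `function.name`, `function.arguments` as a JSON string) used by Mistral's chat completions API as we understand it; `get_order_status` and its data are purely hypothetical, and the tool call below is simulated rather than returned by a real model.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical business function; stands in for a database or API lookup."""
    fake_db = {"A-1001": "shipped", "A-1002": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


# Tool definition sent with the chat request so the model knows what it can call.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {
                    "order_id": {"type": "string", "description": "Order identifier."}
                },
                "required": ["order_id"],
            },
        },
    }
]

# Map tool names to local Python callables.
REGISTRY = {"get_order_status": get_order_status}


def dispatch_tool_call(tool_call: dict) -> dict:
    """Run one tool call from the model and build the 'tool' message to send back."""
    name = tool_call["function"]["name"]
    raw_args = tool_call["function"]["arguments"]
    # Arguments normally arrive as a JSON string; accept an already-parsed dict too.
    args = raw_args if isinstance(raw_args, dict) else json.loads(raw_args)
    result = REGISTRY[name](**args)
    return {
        "role": "tool",
        "name": name,
        "content": json.dumps(result),
        "tool_call_id": tool_call["id"],
    }


if __name__ == "__main__":
    # Simulated entry shaped like choices[0].message.tool_calls[0].
    simulated = {
        "id": "call_001",
        "type": "function",
        "function": {"name": "get_order_status", "arguments": '{"order_id": "A-1001"}'},
    }
    print(dispatch_tool_call(simulated))
```

The returned `tool` message is appended to the conversation and sent back, so the model can phrase the final answer from the tool result.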

Customization Through Fine-Tuning

Mistral AI has released both base and instruct checkpoints for Mistral Small 3.1, providing developers with options for downstream customization. This approach facilitates fine-tuning for specialized domains, creating subject matter experts for fields like:

- Legal advice and document analysis

- Medical diagnostics and healthcare

- Technical support for specific products

- Financial analysis and consulting

- Educational content creation

Multilingual Support

Language barriers can limit AI adoption. Mistral Small 3.1 supports dozens of languages, expanding its utility across international markets and diverse user bases.

PART 3. Industry Applications and Use Cases

Enterprise Solutions

For enterprise environments, Mistral Small 3.1 offers a compelling combination of performance and cost-efficiency. Key applications include:

- Document Processing: Extract, summarize, and analyze information from complex documents including text and images

- Customer Engagement: Build intelligent chatbots and virtual assistants that understand context and provide helpful responses

- Data Analysis: Interpret complex datasets and generate insights in natural language

- Content Creation: Generate high-quality content across multiple formats and languages

- Knowledge Management: Organize, retrieve, and synthesize information from corporate knowledge bases

Developer and Consumer Applications

Individual developers and consumer-facing applications benefit from Mistral Small 3.1's accessibility:

- On-Device AI: Run sophisticated AI capabilities directly on consumer hardware using quantized builds

- Creative Tools: Enhance applications for writing, coding, and content creation

- Educational Platforms: Develop personalized learning experiences with contextual understanding

- Research Assistants: Enable complex information retrieval and synthesis for academic and professional research

- Productivity Tools: Build intelligent systems for task management, scheduling, and workflow optimization

PART 4. Availability and Implementation

Mistral Small 3.1 is available through multiple channels to suit different implementation needs:

- Download directly from Hugging Face (Mistral Small 3.1 Base and Mistral Small 3.1 Instruct)

- Access via API through Mistral AI's developer playground, La Plateforme

- Deploy on Google Cloud Vertex AI for enterprise solutions

- Announced as coming soon to NVIDIA NIM and Microsoft Azure AI Foundry

For organizations requiring private inference infrastructure with custom optimizations, Mistral AI offers enterprise-specific deployment options.
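For the La Plateforme API route, a minimal sketch using only Python's standard library might look like the following. It assumes the chat completions endpoint at `https://api.mistral.ai/v1/chat/completions`, bearer-token authentication through a `MISTRAL_API_KEY` environment variable, and the model identifier `mistral-small-2503`; check Mistral's current API reference before relying on any of these. Mistral also publishes an official Python SDK, which most production code would use instead.

```python
import json
import os
import urllib.request

# ASSUMED endpoint and model identifier; confirm in Mistral's API reference.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-small-2503"


def build_chat_request(prompt: str, api_key: str,
                       model: str = MODEL) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for a single-turn chat."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the first choice's message text."""
    req = build_chat_request(prompt, os.environ["MISTRAL_API_KEY"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__" and "MISTRAL_API_KEY" in os.environ:
    # Only makes a network call when an API key is configured.
    print(chat("Summarize the Apache 2.0 license in one sentence."))
```

Because the model weights are also downloadable, the same application logic can later be pointed at a self-hosted deployment instead of the hosted API.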

Conclusion: The Future of Accessible AI

Mistral Small 3.1 represents a significant milestone in the democratization of advanced AI capabilities. By combining state-of-the-art performance with open-source accessibility, this model empowers developers and organizations to build sophisticated AI applications without the limitations of proprietary systems.

As the AI landscape continues to evolve, models like Mistral Small 3.1 demonstrate that cutting-edge performance and open access can coexist. Whether you're building enterprise solutions or personal projects, this versatile model offers the tools needed to create intelligent, responsive, and multimodal experiences.

The release of Mistral Small 3.1 under the Apache 2.0 license reinforces Mistral AI's commitment to open releases that benefit the wider AI community. As developers explore and build upon this foundation, we can expect to see even more creative and impactful applications emerge.