How to Use DeepSeek R1 on Azure: A Practical Guide with Code Examples

DeepSeek R1 has drawn wide attention in the AI community, and Microsoft has announced its availability in Azure AI Foundry. This puts the model within reach of developers and enterprises that already build on Azure.

Using DeepSeek R1 through Azure can be a good fit for organizations that prioritize service stability and regulatory compliance, because the model runs on Azure's infrastructure, which offers enterprise-grade reliability and supports a range of compliance standards. This practical guide walks through the essential steps to deploy DeepSeek R1 in your Azure environment and call it from JavaScript.

You can also access DeepSeek R1 directly on ChatHub.

Deploying DeepSeek R1 Through Azure AI Foundry

- If you don't have an Azure subscription yet, sign up for an Azure account first.

- Navigate to Azure AI Foundry.

- Locate the Model Catalog in the left navigation pane and find DeepSeek R1.

- Open the model card and click the Deploy button.

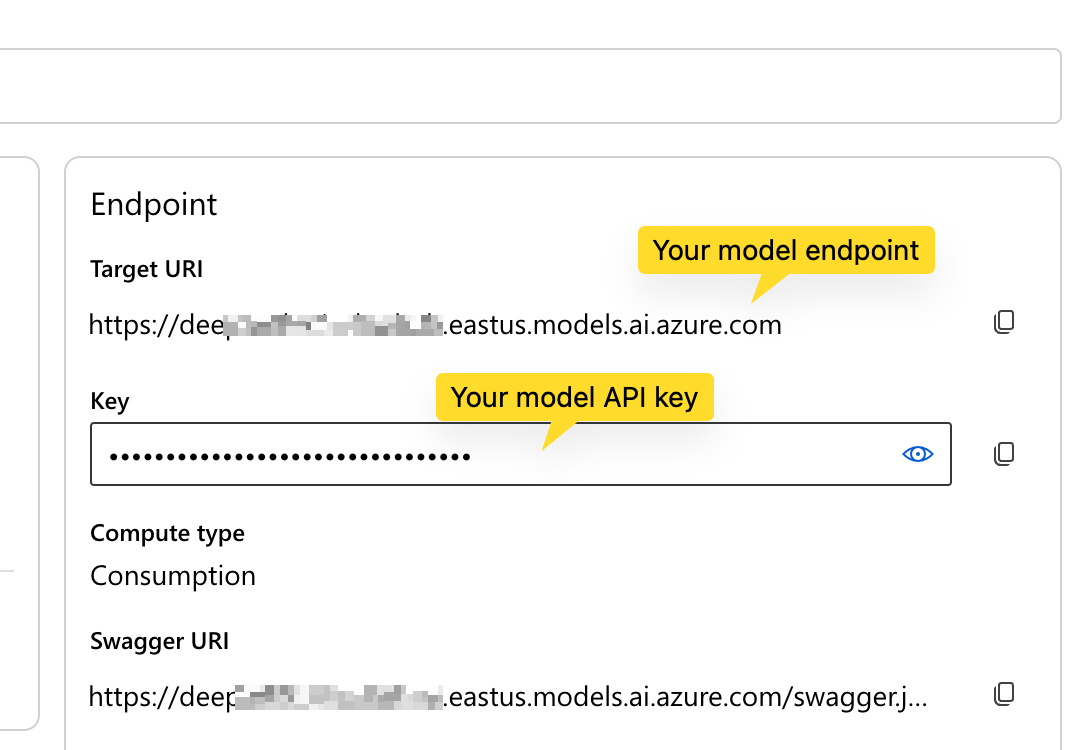

- In less than a minute, you should land on the deployment page, which shows the endpoint URL and API key you need to call the model.

Accessing DeepSeek R1 with the JavaScript SDK

Installation

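```bash
npm install @azure-rest/ai-inference @azure/core-auth @azure/core-sse
```

The snippets below use ES module syntax and top-level `await`, so save them in a `.mjs` file or set `"type": "module"` in your `package.json`.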

Create model client

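```javascript
import ModelClient from '@azure-rest/ai-inference'
import { AzureKeyCredential } from '@azure/core-auth'

// ModelClient is a factory function, so it is called without `new`.
// Replace the placeholders with the endpoint and key from your deployment page.
const client = ModelClient(
  'your model endpoint',
  new AzureKeyCredential('your model api key'),
)
```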
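The client above uses placeholder strings for the endpoint and key. A common alternative is to read both values from environment variables so the key stays out of source control. The following is a minimal sketch under that assumption: the helper `loadDeploymentConfig` and the variable names `AZURE_INFERENCE_ENDPOINT` and `AZURE_INFERENCE_KEY` are hypothetical choices for this example, not names the SDK reads on its own.

```javascript
// Minimal sketch: load the deployment endpoint and API key from environment
// variables. AZURE_INFERENCE_ENDPOINT and AZURE_INFERENCE_KEY are hypothetical
// names chosen for this example; the SDK does not read them automatically.
function loadDeploymentConfig(env = process.env) {
  const endpoint = env.AZURE_INFERENCE_ENDPOINT
  const key = env.AZURE_INFERENCE_KEY

  // Report every missing variable at once rather than failing on the first
  const missing = []
  if (!endpoint) missing.push('AZURE_INFERENCE_ENDPOINT')
  if (!key) missing.push('AZURE_INFERENCE_KEY')
  if (missing.length > 0) {
    throw new Error(`Missing environment variable(s): ${missing.join(', ')}`)
  }

  // new URL() throws a TypeError on malformed input; also require HTTPS
  const url = new URL(endpoint)
  if (url.protocol !== 'https:') {
    throw new Error(`Endpoint must use https, got ${url.protocol}`)
  }

  return { endpoint, key }
}
```

You would then pass the returned `endpoint` and `key` to the client in place of the placeholder strings. Accepting `env` as a parameter (defaulting to `process.env`) keeps the helper easy to exercise without touching the real environment.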

Generate response

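```javascript
const response = await client.path("/chat/completions").post({
  body: {
    messages: [{ role: "user", content: "Hello, DeepSeek!" }],
  },
})

// REST clients report the HTTP status as a string
if (response.status !== "200") {
  throw new Error(`Request failed with HTTP status ${response.status}`)
}

console.log(response.body.choices[0].message.content)
```

Generate streaming response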
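With `stream: true`, each `event.data` value produced by the streaming request in this section is a JSON-encoded chunk rather than plain text, and the stream typically ends with a literal `[DONE]` event. DeepSeek R1 also typically places its reasoning inside `<think>...</think>` ahead of the final answer. The two helpers below are a minimal sketch for post-processing collected `event.data` strings; `collectStreamedText` and `splitReasoning` are hypothetical names, and the code assumes OpenAI-style chunks (`choices[0].delta.content`), a single choice per request, and the `<think>` tag convention.

```javascript
// Minimal sketch, assuming OpenAI-style streaming chunks of the form
// { choices: [{ delta: { content } }] }, a single choice per request, and a
// literal "[DONE]" event at the end of the stream.
function collectStreamedText(dataItems) {
  let text = ''
  for (const data of dataItems) {
    // Stop at the end-of-stream sentinel; it is not JSON
    if (data === '[DONE]') break
    const chunk = JSON.parse(data)
    // Some chunks may carry no choices or an empty delta, so guard each step
    const piece = chunk.choices?.[0]?.delta?.content
    if (piece) text += piece
  }
  return text
}

// Assumes the reasoning is wrapped in a single <think>...</think> block that
// precedes the final answer; text without the tags is treated as all answer.
function splitReasoning(text) {
  const match = text.match(/<think>([\s\S]*?)<\/think>([\s\S]*)/)
  if (!match) return { reasoning: '', answer: text.trim() }
  return { reasoning: match[1].trim(), answer: match[2].trim() }
}
```

For example, you could push each `event.data` into an array inside the `for await` loop and call `splitReasoning(collectStreamedText(items))` once the loop finishes. Keeping parsing separate from network I/O lets you test the helpers without a live deployment.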

```javascript
import { createSseStream } from '@azure/core-sse'

const response = await client.path("/chat/completions").post({
  body: {
    messages: [{ role: "user", content: "Hello, DeepSeek!" }],
    stream: true,
  },
}).asNodeStream()

// Parse the raw response body as a stream of server-sent events
const stream = createSseStream(response.body)

for await (const event of stream) {
  console.log(event.data)
}
```

Full code example

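```javascript
import ModelClient from '@azure-rest/ai-inference'
import { AzureKeyCredential } from '@azure/core-auth'
import { createSseStream } from '@azure/core-sse'

// Replace the placeholders with the endpoint and key from your deployment page
const client = ModelClient(
  'your model endpoint',
  new AzureKeyCredential('your model api key'),
)

const response = await client.path("/chat/completions").post({
  body: {
    messages: [{ role: "user", content: "Hello, DeepSeek!" }],
    stream: true,
  },
}).asNodeStream()

const stream = response.body
if (!stream) {
  throw new Error("The response stream is undefined")
}
if (response.status !== "200") {
  throw new Error(`Request failed with HTTP status ${response.status}`)
}

for await (const event of createSseStream(stream)) {
  // The service signals the end of the stream with a literal [DONE] event
  if (event.data === "[DONE]") break
  // Each event carries a JSON chunk; print the incremental text it contains
  for (const choice of JSON.parse(event.data).choices) {
    process.stdout.write(choice.delta?.content ?? "")
  }
}
```

Conclusion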

Throughout this guide, we've walked through the complete process of deploying and integrating the DeepSeek R1 model:

What We've Covered:

- Deploying the DeepSeek R1 model on Azure AI Foundry

- Obtaining the endpoint and API key for the deployment

- Integrating with the Azure AI Inference SDK for JavaScript

- Sending standard and streaming chat completion requests

🌟 Happy Coding! 🌟