Gemini 2.0 Flash Thinking: Evaluating Google’s Answer to ChatGPT-o1

Explore a comprehensive comparison between Google’s newly released Gemini 2.0 Flash Thinking and OpenAI’s ChatGPT-o1 Preview. Discover why Google’s latest AI model is generating significant buzz in the industry.

Part 1: What is Gemini 2.0 Flash Thinking?

The rapid advancements in artificial intelligence over recent years have been astounding, with tech giants like Google and OpenAI continuously pushing the boundaries of innovation. Recently, OpenAI introduced its o1 model series, which is trained with large-scale reinforcement learning to reason before answering. These models produce a long internal chain of thought before generating a response, which improves their performance on complex tasks such as math, science, and coding.

Google has now unveiled Gemini 2.0 Flash Thinking, an experimental model designed to solve complex problems quickly while showing its reasoning process. It builds on 2.0 Flash, which Google optimized for speed and performance, and is trained to use its "thoughts" to strengthen its reasoning. Jeff Dean, Chief Scientist of Google DeepMind and Google Research, shared a demo on X (formerly Twitter) showing the model working through a physics problem and laying out its reasoning steps as it goes.

Users on X have also tested Gemini 2.0 Flash Thinking with image-based math problems. In the examples they shared, it responded quickly, solved the problems correctly, and showed its reasoning.

As a direct competitor to OpenAI’s ChatGPT-o1 Preview, what sets Gemini 2.0 Flash Thinking apart? In the sections below, we’ll compare these two cutting-edge AI models in detail.

Part 2: Gemini 2.0 Flash Thinking vs. ChatGPT-o1 Preview — A Comprehensive Comparison

OpenAI’s ChatGPT has long dominated the generative AI space, establishing itself as the gold standard for conversational AI. However, with the debut of ChatGPT-o1 Preview and Gemini 2.0 Flash Thinking, competition in the space is intensifying. Below is a side-by-side evaluation of the two models across several critical dimensions:

1. Performance & Speed

- Gemini 2.0 Flash Thinking: As its name suggests, speed is the model’s core strength. It builds on 2.0 Flash, which Google optimized for low latency, making it a good fit for real-time applications such as brainstorming or customer service, where quick and accurate responses are essential.

- ChatGPT-o1 Preview: OpenAI’s model delivers significant gains over GPT-4o on reasoning-heavy tasks. Because it works through a long chain of thought before responding, however, answers to complex queries can take noticeably longer.

Winner: Gemini 2.0 Flash Thinking edges out its rival with lower latency.

2. Multimodal Input & Output

- Gemini 2.0 Flash Thinking: Google has invested heavily in multimodal AI. Gemini 2.0 Flash Thinking accepts both text and image input, the broader Gemini family also handles audio and video, and Gemini products can draw on Google services such as Maps and real-time translation. For example, after analyzing an uploaded image, Gemini can recognize its visual elements and suggest a tailored marketing strategy.

- ChatGPT-o1 Preview: o1-preview accepts text input only. OpenAI’s image and voice features are offered through other models such as GPT-4o, so as a single reasoning model its multimodal support trails Gemini’s.

Winner: Gemini 2.0 Flash Thinking triumphs, thanks to its broader multimodal support.

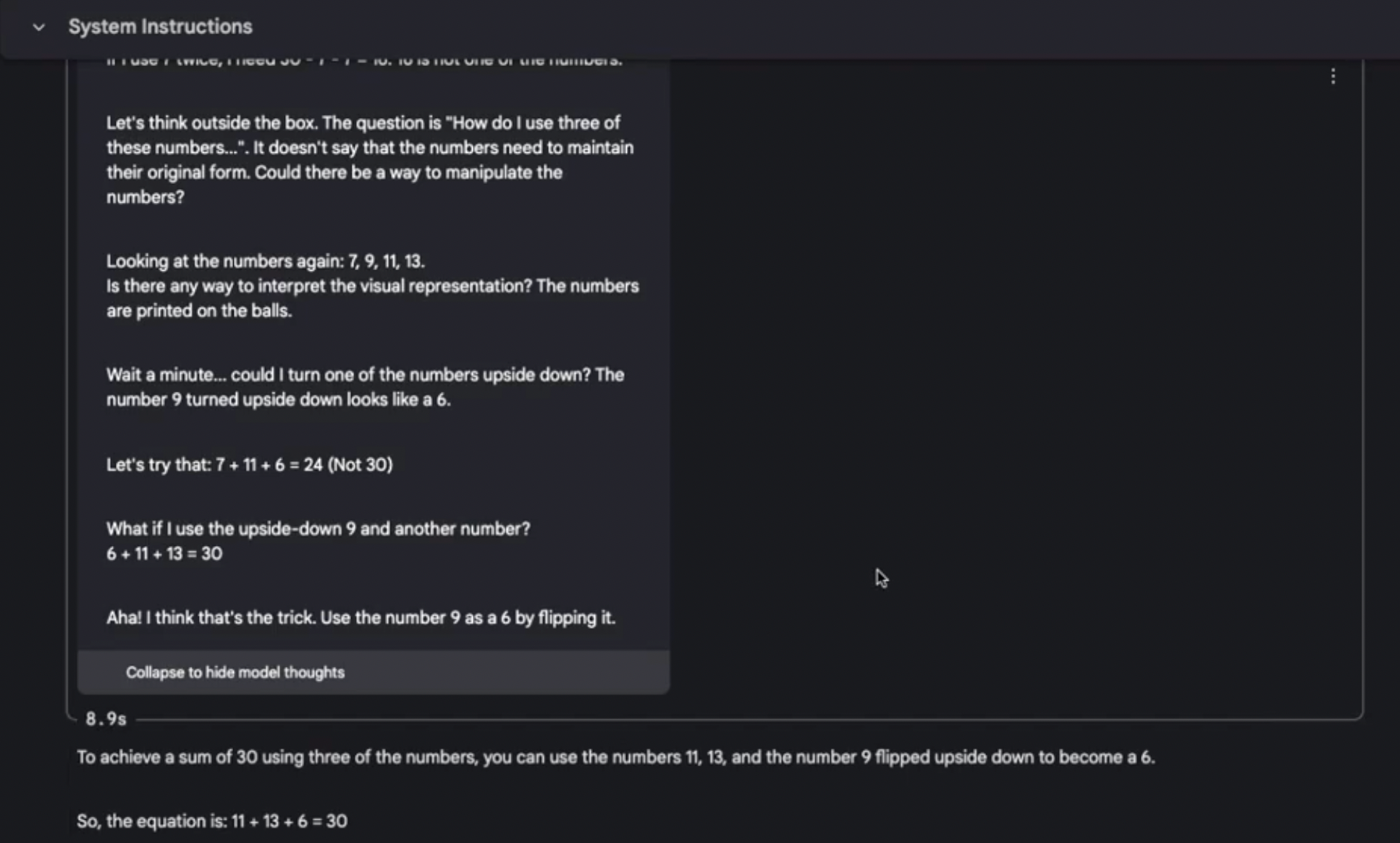

3. Reasoning Ability & Context Understanding

- Gemini 2.0 Flash Thinking: The model works through its reasoning explicitly and shows those steps to the user before giving its final answer, with an emphasis on reaching that answer quickly. Whether solving complex mathematical problems or analyzing intricate datasets, it aims to balance precision and speed.

- ChatGPT-o1 Preview: OpenAI’s latest model improves ChatGPT’s context awareness, making it well suited to exploratory conversations, brainstorming, and deep philosophical discussions. Unlike Gemini, it shows users only a summary of its reasoning rather than the full chain of thought. In tasks that require rapidly processing large datasets, it is slower than Gemini’s speed-driven approach.

Winner: It’s a tie. ChatGPT-o1 Preview is better suited for nuanced conversational engagement, while Gemini 2.0 Flash Thinking excels in rapid problem-solving scenarios.

4. Ease of Integration

- Gemini 2.0 Flash Thinking: Google has strategically embedded Gemini across its ecosystem, including Google Workspace, Android, and Chrome, while Flash Thinking itself launched as an experimental model in Google AI Studio and the Gemini API. For enterprises already using Google products, adopting Gemini can be straightforward and cost-effective.

- ChatGPT-o1 Preview: OpenAI’s models are offered mainly through ChatGPT and the OpenAI API, or integrated into Microsoft products such as Microsoft 365 Copilot through the OpenAI-Microsoft partnership. While powerful, this ecosystem lacks the cohesive, wide-reaching integration Google provides.

Winner: Gemini 2.0 Flash Thinking stands out due to its deep integration into Google’s extensive range of products and services.

Part 3: One-Click Access to Gemini 2.0 Flash Thinking on ChatHub

One of the most exciting advancements in AI is now available on ChatHub: Gemini 2.0 Flash Thinking! You can use it on the ChatHub website, add the ChatHub extension to Google Chrome, or download the ChatHub app to your phone.

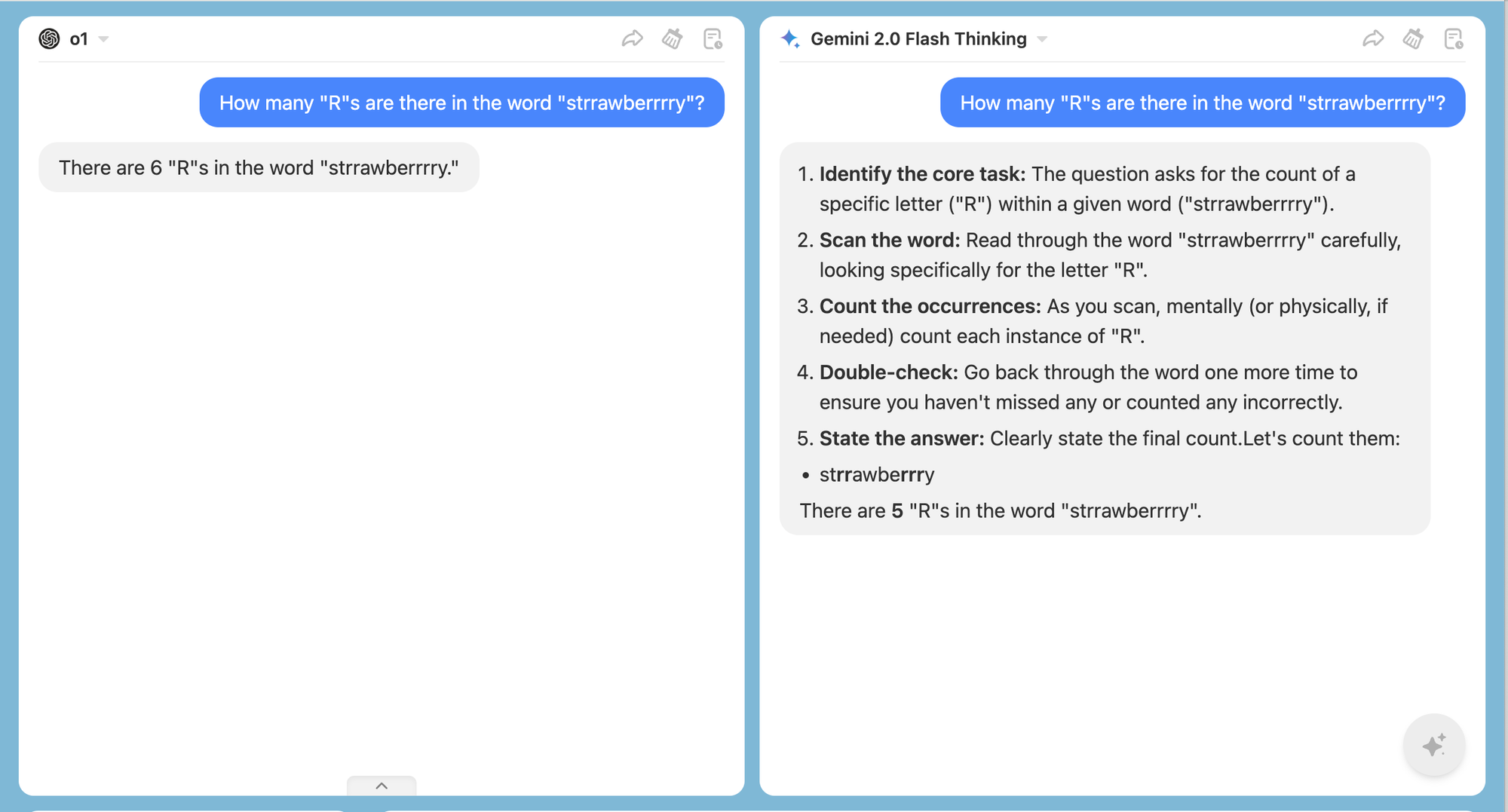

As the example above shows, Gemini 2.0 Flash Thinking still makes mistakes on some questions. If you want to see how ChatGPT-o1 Preview and Gemini 2.0 Flash Thinking differ on these same questions, and how accurately each one answers, ChatHub offers the most straightforward way to compare them side by side.

Conclusion

The rivalry between Google’s Gemini 2.0 Flash Thinking and OpenAI’s ChatGPT-o1 Preview marks an exciting era of competition in artificial intelligence. While ChatGPT-o1 continues to shine in conversational depth and nuanced context handling, Gemini 2.0 Flash Thinking emerges as a formidable contender with its speed, broad multimodal features, and deep ecosystem integration.

As both tech giants push the boundaries of generative AI, users and industries alike will benefit from this race to provide faster, smarter, and more versatile AI solutions.