ENIAC’s Legacy: A Journey from 1946 to the Dawn of Artificial General Intelligence (AGI)

Explore the groundbreaking history of ENIAC, widely regarded as the first programmable, electronic, general-purpose digital computer, from its public unveiling in 1946 to its lasting influence on AI and the pursuit of Artificial General Intelligence (AGI).

1946: The Birth of ENIAC and the Beginning of a Digital Revolution

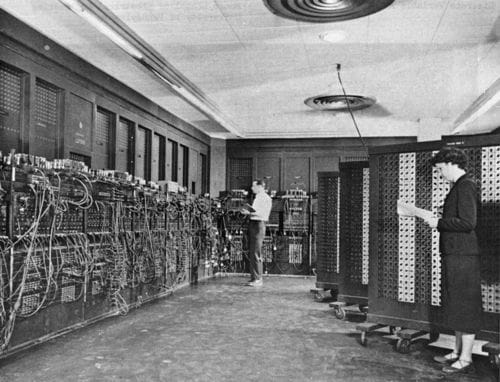

Seventy-nine years ago, on this day in 1946, the world witnessed a technological breakthrough that would forever alter the trajectory of human history: the unveiling of ENIAC (Electronic Numerical Integrator and Computer). Often hailed as the first programmable general-purpose computer, ENIAC marks a cornerstone in the evolution of computing. Let’s dive into its creation, its influence on artificial intelligence (AI), and how it laid the foundation for our pursuit of Artificial General Intelligence (AGI).

What Was ENIAC?

ENIAC, often considered a pioneer of modern computing, was designed by John W. Mauchly and J. Presper Eckert. The massive machine was built at the University of Pennsylvania under a U.S. Army contract to calculate artillery trajectories during World War II. Construction began in 1943, and the machine was publicly unveiled in 1946, after roughly three years of development. ENIAC transformed computation, reducing trajectory calculations that took human computers many hours by hand to a matter of seconds.

Key Features of ENIAC:

- Size: ENIAC weighed about 30 short tons (roughly 27 metric tons) and occupied about 1,800 square feet of floor space.

- Components: It contained 17,468 vacuum tubes, 70,000 resistors, and 10,000 capacitors.

- Speed: ENIAC could perform 5,000 additions or 357 multiplications per second—truly remarkable for its time.
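
To put the speed figures above in per-operation terms, here is a small arithmetic sketch in Python. The helper name `seconds_per_op` is hypothetical, and the rates are simply the ones quoted in the list, so treat the results as rough averages rather than exact machine timings.

```python
# Rates quoted in the list above (operations per second).
ADDITIONS_PER_SECOND = 5_000
MULTIPLICATIONS_PER_SECOND = 357


def seconds_per_op(ops_per_second: float) -> float:
    """Return the average time taken by one operation, in seconds."""
    return 1.0 / ops_per_second


# Convert to friendlier units for printing.
add_time_us = seconds_per_op(ADDITIONS_PER_SECOND) * 1e6        # microseconds
mul_time_ms = seconds_per_op(MULTIPLICATIONS_PER_SECOND) * 1e3  # milliseconds

# How many additions fit in the time of one multiplication.
adds_per_mul = ADDITIONS_PER_SECOND / MULTIPLICATIONS_PER_SECOND

print(f"One addition: about {add_time_us:.0f} microseconds")
print(f"One multiplication: about {mul_time_ms:.1f} milliseconds")
print(f"One multiplication takes about as long as {adds_per_mul:.0f} additions")
```

In other words, the quoted rates imply that a single addition took on the order of 200 microseconds, and a multiplication took about as long as 14 additions.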

For perspective, ENIAC was not just a calculator but a programmable digital computer: by rewiring its plugboards and resetting its switches, operators could reconfigure it to tackle a variety of problems. Its creation helped launch the digital revolution and paved the way for subsequent advances in computing.

The Invention of ENIAC and Its Legacy

The inventors of ENIAC, Mauchly and Eckert, envisioned a future where machines could compute faster and more accurately than ever before. While their initial focus was assisting military efforts, they sparked a chain reaction in the scientific community. ENIAC became a reference point for later digital hardware and for the practice of programming.

A few ways ENIAC left its mark on history include:

- Inspiration for Modern Computers: Eckert and Mauchly went on to build the UNIVAC I, one of the first commercial computers, and ENIAC’s success encouraged IBM and others to develop electronic computers of their own.

- Driving Innovation in Programming: Setting up a problem on ENIAC meant breaking it into sequences of elementary operations, an early form of the programming practice that later languages and systems built on.

- Laying the Foundation for AI: ENIAC’s ability to process data at unprecedented speeds set the stage for artificial intelligence researchers to imagine computational systems capable of simulating human thought and learning.

From ENIAC to Artificial Intelligence

The transition from ENIAC to the development of artificial intelligence (AI) represents one of the most significant leaps in technological history. By the 1950s, researchers were already asking: Can machines think? The invention of early computers like ENIAC provided a framework for scientists to explore this possibility.

How ENIAC Influenced AI Development

- Increased Computational Power: ENIAC’s ability to perform complex calculations faster than humans encouraged researchers to explore how machines could mimic cognitive processes such as reasoning and problem-solving.

- Data Processing Models: ENIAC could repeat a sequence of operations many times over, and iterative computation of this kind remains a fundamental characteristic of machine learning algorithms.

- Programming Paradigms: Programming ENIAC, though arduous, introduced concepts like conditional statements and loops, which are the backbone of today’s AI software.
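
The loop and conditional ideas in the list above can be illustrated with a toy version of the kind of trajectory problem ENIAC was built for. This is a minimal sketch in modern Python, not a reconstruction of ENIAC’s actual program: the function name `simulate_range`, the step size, and the drag-free physics are all simplifying assumptions (real firing tables had to account for air resistance).

```python
import math


def simulate_range(speed_m_s: float, angle_deg: float, dt: float = 0.001) -> float:
    """Estimate the horizontal range of a drag-free projectile.

    Uses simple Euler time stepping. Assumes a launch angle strictly
    between 0 and 180 degrees so the projectile eventually lands.
    """
    g = 9.81  # gravitational acceleration in m/s^2 (assumed constant)
    angle = math.radians(angle_deg)
    vx = speed_m_s * math.cos(angle)
    vy = speed_m_s * math.sin(angle)
    x, y = 0.0, 0.0

    while True:              # loop: repeat the same update step
        x += vx * dt
        y += vy * dt
        vy -= g * dt
        if y <= 0.0:         # conditional: stop once the projectile lands
            return x


estimate = simulate_range(100.0, 45.0)
# Closed-form range for the same drag-free case, for comparison.
closed_form = 100.0**2 * math.sin(math.radians(2 * 45.0)) / 9.81
print(f"Stepped estimate: {estimate:.1f} m, closed form: {closed_form:.1f} m")
```

The stepped estimate should land close to the closed-form value. The point is the shape of the computation: one small update repeated many times, with a test that decides when to stop.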

Since ENIAC’s time, AI has evolved into one of the most transformative fields in science, propelling advancements in industries as diverse as healthcare, finance, transportation, and entertainment.

ENIAC to AGI: A New Frontier

The progression from ENIAC to Artificial General Intelligence (AGI) is awe-inspiring. AGI, unlike narrow AI, aims to create machines that can perform any intellectual task a human being can do. While ENIAC primarily automated calculations, AGI seeks to automate thinking itself.

Understanding AGI

Artificial General Intelligence refers to systems that possess the ability to learn, understand, and apply knowledge autonomously, across any domain—just like a human. AGI would not only perform specific tasks like narrow AI (e.g., GPT-4 or self-driving systems) but would also reason, strategize, and adapt to new challenges independently.

Linking the Past to the Future

ENIAC’s immense legacy is best reflected in the trajectory of modern AI and the pursuit of AGI. Here’s how the journey connects:

1. The Vision of Universal Machines

ENIAC put into practice the idea of a general-purpose machine that could carry out any computation it was set up to perform, albeit through laborious manual programming. Today’s AI systems, particularly deep learning models, embody that spirit but operate at much higher levels of abstraction and efficiency.

2. Scaling Computational Power

ENIAC was limited by its vacuum tube technology, whereas modern AI benefits from advances in semiconductors, specialized processors such as GPUs, and cloud infrastructure, with quantum computing a possible future contributor. This enormous increase in computational power is a large part of why AGI is now discussed as a tangible goal.

3. The Unstoppable Pursuit of Innovation

From ENIAC’s inventors to today’s AI pioneers, the drive to create systems that complement and enhance human intelligence has remained constant. AGI represents the next chapter in realizing that vision.

Challenges and Opportunities in Achieving AGI

While ENIAC proved that machines could automate calculations, AGI faces significantly higher hurdles. These include:

- Ethical Concerns: AGI raises questions about control, rights, and responsibilities in a world where machines may rival human intellect.

- Technical Complexity: Humans have yet to fully understand how the brain works, let alone replicate it in machine form.

- Resource Needs: Achieving AGI necessitates vast amounts of data, energy, and computational power—all of which carry environmental and logistical implications.

On the flip side, AGI could bring transformative benefits, such as helping to address climate change, accelerating cures for diseases, and potentially unlocking some of humanity’s greatest mysteries.

Looking Back and Moving Forward

As we celebrate the legacy of ENIAC, it’s essential to acknowledge how its 1946 debut laid the groundwork for everything from personal computers to smartphones to cutting-edge AI systems. Mauchly and Eckert’s innovation shaped the 20th century, and its ripple effects are still shaping the pursuit of artificial general intelligence in the 21st century and beyond.

Though AGI remains a vision for now, the tools and principles established nearly eight decades ago with ENIAC serve as a constant reminder: Technology has limitless potential to transform society—if harnessed responsibly.

Conclusion

From the hum of ENIAC’s vacuum tubes to the neural networks of today’s AI, our journey is far from over. Each day brings us closer to machines that don’t just compute but truly comprehend. As we reflect on the past and look toward AGI, one thing is certain: innovation remains our greatest asset in building a future worthy of ENIAC’s groundbreaking legacy.
