DeepSeek, Gemini, or OpenAI—Who Will Stand Out in the End?


The AI landscape is witnessing a seismic shift as newcomers like DeepSeek disrupt the dominance of established giants like OpenAI and Google Gemini. With explosive growth in user traffic, groundbreaking cost-efficiency, and a relentless focus on transparency, DeepSeek has surged to become the second-most popular AI chatbot globally, overtaking Gemini in daily visits. But can it sustain this momentum against trillion-dollar rivals? Let’s dissect the battle reshaping AI’s future.

The AI Market’s New Contender: DeepSeek’s Meteoric Ascent

According to SimilarWeb, DeepSeek.com’s daily visits skyrocketed 614% in a single week, hitting a record 49 million visits by late January 2025 and surpassing Google Gemini’s 1.5 million U.S. daily visits. DeepSeek still trails OpenAI’s ChatGPT (19.3 million U.S. visits) and Microsoft’s Bing-Copilot hybrid, but its growth is unparalleled: daily visits rose from 300,000 in early January to 33.4 million by January 27. It now commands 9.2% of the AI chat market, triggering a domino effect across the sector.

Key Drivers of DeepSeek’s Success:

- Cost-Efficiency: DeepSeek’s open-source models undercut competitors on pricing, offering enterprise-grade inference at a fraction of the cost.

- Transparency: Unlike closed models like GPT-4, DeepSeek discloses its reasoning steps, enabling businesses to fine-tune outputs.

- Niche Dominance: It briefly dethroned ChatGPT as the top-rated free iOS app in the U.S., signaling its consumer appeal.

However, rapid scaling has strained its infrastructure. The platform recently paused API service upgrades due to “server resource shortages,” highlighting the challenges of sustaining hypergrowth.

The Trillion-Dollar Elephant in the Room: OpenAI and Google’s Counterattacks

Despite DeepSeek’s rise, OpenAI and Google retain formidable advantages:

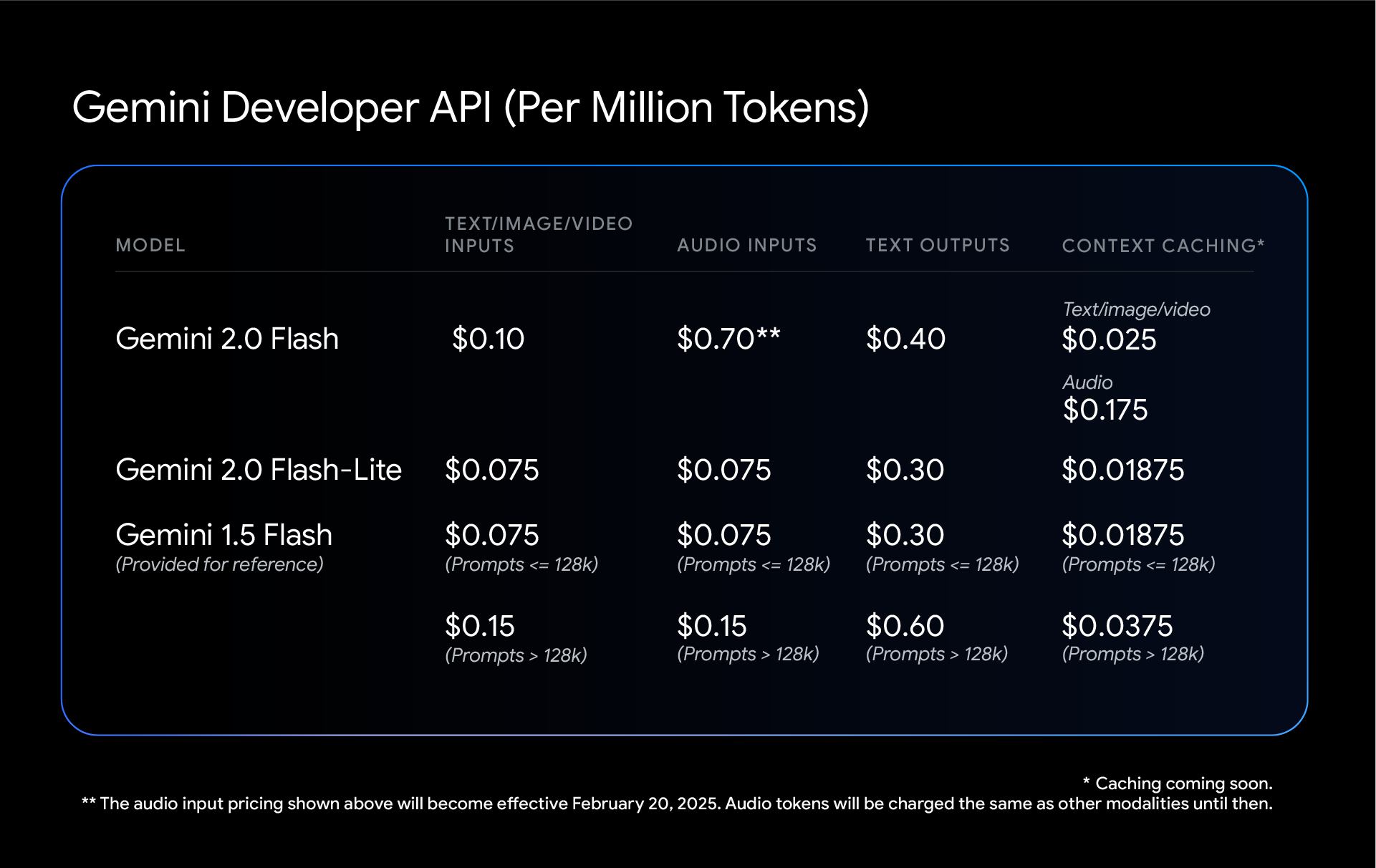

Google’s Gemini 2.0 series now targets DeepSeek’s budget niche with aggressive pricing, while OpenAI’s “Deep Research” feature leverages advanced web crawling for hyper-personalized RAG workflows.

Google CEO Sundar Pichai downplays DeepSeek’s threat, citing Gemini’s superior “efficiency” and financial muscle. Yet, DeepSeek’s open-source strategy poses a unique risk: enterprises can use its DeepSeek-R1 as a “teacher model” to build smaller, task-specific AI agents—a game-changer for SMEs lacking OpenAI’s budget.

The Real Battleground: Custom AI Agents and Data Quality

The trillion-dollar question isn’t which model wins, but how businesses harness tools like knowledge distillation, RAG, and supervised fine-tuning (SFT) to build domain-specific AI:

- Knowledge Distillation: DeepSeek-R1 allows companies to create compact models that retain o1-level reasoning for specialized tasks (e.g., medical diagnostics or legal analysis).

- Retrieval-Augmented Generation (RAG): Hybrid approaches combining RAG with fine-tuning reduce hallucinations. While DeepSeek’s hallucination rate is 14% (vs. OpenAI’s 8%), integrating proprietary data bridges the gap.

- Data Quality: As American Express CTO Hilary Packer notes, “Clean data is the bedrock of AI success.” Companies must prioritize structuring datasets for RAG, SFT, or RL workflows.
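To make the distillation idea concrete, here is a minimal sketch of the classic soft-label formulation, in which a small "student" is trained to match a large "teacher" model's output distribution. This is an illustration only: the article does not say which distillation recipe is used with DeepSeek-R1, and the logits, the temperature, and the function names below are all hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical scores over three answer classes.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different class

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The student that agrees with the teacher gets the smaller loss, which is the signal a training loop would minimize. In practice, teams may instead fine-tune the student directly on teacher-generated text.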

Wharton professor Ethan Mollick predicts AI’s next frontier is “personality”—models tailored to reflect brand voice or user preferences. Startups like Vectara already help businesses optimize RAG pipelines, signaling a shift from one-size-fits-all models to niche AI agents.
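For readers new to RAG, the sketch below shows the basic retrieve-then-prompt pattern under simplifying assumptions: word-count vectors stand in for a real embedding model, and the documents, the query, and the helper names are hypothetical. It is not a description of any vendor's pipeline.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents,
                    key=lambda d: cosine(q, Counter(tokenize(d))),
                    reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Place the retrieved passages ahead of the question for the model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")

# Hypothetical proprietary knowledge base.
DOCS = [
    "Refunds are processed within 14 days of the return request.",
    "Premium support is available on weekdays from 9am to 5pm.",
    "Shipping to Canada takes five to seven business days.",
]

print(build_prompt("How long do refunds take?", DOCS))
```

Grounding the prompt in retrieved company data is what lets a cheaper model answer from facts it was never trained on, which is why data quality matters as much as model choice.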

AI Chips: The Hidden Catalyst

DeepSeek’s rise isn’t just reshaping software—it’s fueling demand for inference-optimized hardware. While NVIDIA dominates AI training GPUs, rivals like Etched see gold in inference chips:

- Training vs. Inference: Training fits a model’s weights; inference runs the trained model to serve requests. As enterprises adopt smaller models (e.g., GPT-4o mini, Gemini Flash), demand for cost-efficient inference chips will soar.

- Jevons Paradox: Cheaper, efficient models could increase total compute consumption, creating opportunities for AMD, Intel, and startups.
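The Jevons Paradox point can be checked with back-of-the-envelope arithmetic. The sketch below assumes a constant-elasticity demand curve and assumes that price per query falls in step with compute per query; the elasticity values and query counts are hypothetical, not market data.

```python
def total_compute(base_queries, base_compute_per_query, efficiency_gain, elasticity):
    """Total compute after models become `efficiency_gain` times cheaper to run.

    Assumes demand follows queries ~ price ** (-elasticity), with price
    per query proportional to compute per query (both are assumptions).
    """
    new_compute_per_query = base_compute_per_query / efficiency_gain
    new_queries = base_queries * efficiency_gain ** elasticity
    return new_queries * new_compute_per_query

baseline = total_compute(1000, 1.0, 1, 1.5)  # no efficiency gain

# Elastic demand (elasticity > 1): total compute goes UP despite cheaper queries.
elastic = total_compute(1000, 1.0, 10, 1.5)

# Inelastic demand (elasticity < 1): total compute goes down.
inelastic = total_compute(1000, 1.0, 10, 0.5)

print(baseline, elastic, inelastic)
```

Under these assumptions, total compute scales with the efficiency gain raised to the power of (elasticity minus 1), so the paradox only holds when demand is elastic. Whether AI inference demand actually behaves that way is the open question behind the chip makers' bets.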

Etched co-founder Robert Wachen reports surging interest since DeepSeek’s launch, underscoring the $100B inference chip market’s potential.

The Verdict: Survival of the Most Adaptable

DeepSeek’s disruption proves that cost and transparency matter, but long-term victory requires:

- Infrastructure Investment: Can DeepSeek match OpenAI’s $800B data center roadmap?

- Regulatory Navigation: Can it avoid Gemini’s privacy pitfalls in Western markets?

- Innovation Beyond Pricing: Features like Deep Research and multi-modal Gemini Ultra keep rivals ahead.

For businesses, the takeaway is clear: Leverage distillation, RAG, and clean data to build tailored AI agents—or risk obsolescence. As Sam Witteveen notes, “The future is multi-model,” where budget-friendly tools handle 80% of tasks, reserving premium models for edge cases.
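One way to picture a multi-model setup is a simple router that sends routine requests to a cheap model and escalates the rest. The sketch below is illustrative only: the model names, the `needs_deep_reasoning` flag, and the length threshold are hypothetical placeholders, not real API identifiers or recommended settings.

```python
from dataclasses import dataclass

BUDGET_MODEL = "budget-model"    # hypothetical low-cost model name
PREMIUM_MODEL = "premium-model"  # hypothetical high-end model name

@dataclass
class Task:
    prompt: str
    needs_deep_reasoning: bool = False  # set by the caller or a classifier

def pick_model(task, max_budget_chars=2000):
    """Route routine tasks to the budget model and edge cases to the premium one."""
    if task.needs_deep_reasoning or len(task.prompt) > max_budget_chars:
        return PREMIUM_MODEL
    return BUDGET_MODEL

tasks = [
    Task("Summarize this support ticket in one sentence."),
    Task("Draft a multi-step legal analysis of this contract.", needs_deep_reasoning=True),
]
for task in tasks:
    print(pick_model(task), "<-", task.prompt)
```

A production router would likely use a learned classifier or confidence scores rather than a manual flag, but the cost logic is the same: pay premium rates only where the task demands it.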

In this high-stakes race, adaptability—not size—will crown the winner.